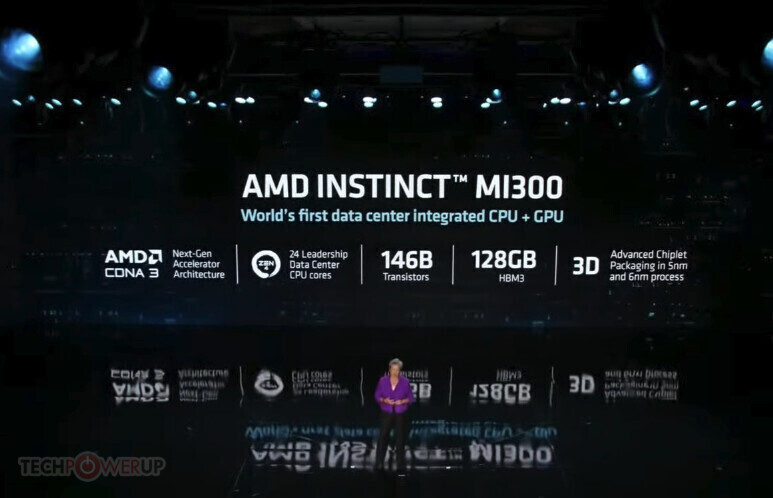

AMD Shows Instinct MI300 Exascale APU with 146 Billion Transistors

The Instinct MI300 APU package is an engineering marvel in its own right, built with advanced chiplet techniques. AMD uses 3D stacking to place nine 5 nm logic chiplets on top of four 6 nm chiplets, with HBM surrounding them. All of this brings the transistor count to 146 billion, which reflects the sheer complexity of such a design. For performance figures, AMD provided a comparison to the Instinct MI250X GPU. In raw AI performance, the MI300 offers an 8x improvement over the MI250X, while the performance-per-watt gain is a smaller 5x. We do not know which benchmark applications were used, but it is possible that standard benchmarks such as MLPerf were among them. For availability, AMD targets the end of 2023, when the “El Capitan” exascale supercomputer will arrive using these Instinct MI300 APU accelerators. Pricing is unknown and will be disclosed to enterprise customers first, around launch.
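
As a rough back-of-the-envelope check, the two ratios AMD quoted can be combined to estimate how much more power the MI300 would draw than the MI250X on the same workload. This is only a sketch: it assumes both figures were measured on the same benchmark, which AMD has not confirmed, and the function name is ours, not AMD's.

```python
def implied_power_ratio(perf_ratio: float, perf_per_watt_ratio: float) -> float:
    """Estimate the relative power draw implied by two quoted ratios.

    Since performance-per-watt = performance / power, it follows that
    power ratio = performance ratio / performance-per-watt ratio.
    Assumption: both ratios refer to the same workload.
    """
    if perf_per_watt_ratio <= 0:
        raise ValueError("perf_per_watt_ratio must be positive")
    return perf_ratio / perf_per_watt_ratio


# Figures quoted by AMD for MI300 vs. MI250X: 8x AI performance, 5x perf-per-watt.
ratio = implied_power_ratio(8.0, 5.0)
print(f"Implied power ratio: {ratio:.1f}x")  # prints "Implied power ratio: 1.6x"
```

Under that assumption, the quoted numbers would imply roughly 1.6x the power draw of the MI250X for the measured workload. This is not a statement about the rated power of either part.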