What Is Path Tracing?

Turn on your TV. Fire up your favorite streaming service. Grab a Coke. A demo of the most important visual technology of our time is as close as your living room couch.

Propelled by an explosion in computing power over the past decade and a half, path tracing has swept through visual media.

It brings big effects to the biggest blockbusters, casts subtle light and shadow on the most immersive melodramas and has propelled the art of animation to new levels.

More’s coming.

Path tracing is going real time, unleashing interactive, photorealistic 3D environments filled with dynamic light and shadow, reflections and refractions.

So what is path tracing? The big idea behind it is seductively simple, connecting innovators in the arts and sciences over the span of half a millennium.

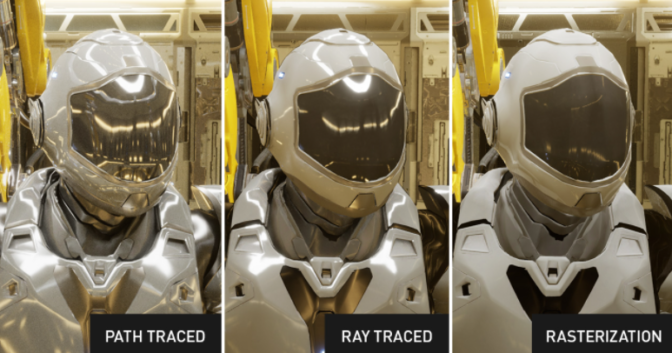

What’s the Difference Between Rasterization and Ray Tracing?

First, let’s define some terms, and how they’re used today to create interactive graphics, meaning graphics that can react in real time to input from a user, such as in video games.

The first, rasterization, is a technique that produces an image as seen from a single viewpoint. It’s been at the heart of GPUs from the start. Modern NVIDIA GPUs can generate over 100 billion rasterized pixels per second. That’s made rasterization ideal for real-time graphics, such as gaming.

Ray tracing is a more powerful technique than rasterization. Rather than being constrained to finding out what is visible from a single point, it can determine what is visible from many different points, in many different directions. Starting with the NVIDIA Turing architecture, NVIDIA GPUs have provided specialized RTX hardware to accelerate this difficult computation. Today, a single GPU can trace billions of rays per second.

Being able to trace all of those rays makes it possible to simulate how light scatters in the real world much more accurately than is possible with rasterization. However, we still must answer two questions: how will we simulate light, and how will we bring that simulation to the GPU?

What’s Ray Tracing? Just Follow the String

To better answer that question, it helps to understand how we got here.

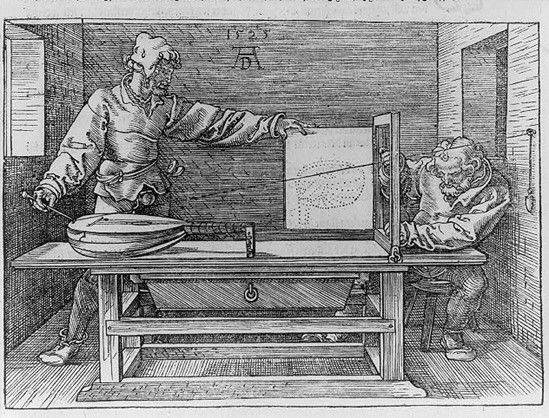

David Luebke, NVIDIA vice president of graphics research, likes to begin the story in the 16th century with Albrecht Dürer — one of the most important figures of the Northern European Renaissance — who used string and weights to replicate a 3D image on a 2D surface.

Dürer made it his life’s work to bring classical and contemporary mathematics together with the arts, achieving breakthroughs in expressiveness and realism.

In 1525, with his Treatise on Measurement, Dürer was the first to describe the idea of ray tracing. Seeing how Dürer described the idea is the easiest way to get your head around the concept.

Just think about how light illuminates the world we see around us.

Now imagine tracing those rays of light backward from the eye with a piece of string like the one Dürer used, to the objects that light interacts with. That’s ray tracing.
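The string analogy can be sketched in a few lines of Python. This is a minimal illustration, not production code: the scene (a single sphere in front of an eye at the origin), the function names `ray_sphere_hit` and `render_ascii`, and the image size are all assumptions made up for this example. One ray is cast backward from the eye through each pixel, and the pixel is marked if the ray touches the sphere.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Return the distance along the ray to the nearest hit, or None on a miss.

    The distance is measured in multiples of the direction vector's length.
    """
    oc = [o - c for o, c in zip(origin, center)]
    # Coefficients of the quadratic |origin + t * direction - center|^2 = radius^2.
    a = sum(d * d for d in direction)
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None  # the "string" never touches the sphere
    root = math.sqrt(disc)
    for t in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)):
        if t > 1e-6:  # only count hits in front of the eye
            return t
    return None


def render_ascii(width=24, height=12):
    """Trace one ray per pixel from the eye and mark the pixels that hit the sphere."""
    eye = (0.0, 0.0, 0.0)
    center, radius = (0.0, 0.0, -3.0), 1.0  # hypothetical scene: one sphere
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            # Map the pixel center onto an image plane at z = -1.
            u = (i + 0.5) / width * 2.0 - 1.0
            v = 1.0 - (j + 0.5) / height * 2.0
            hit = ray_sphere_hit(eye, (u, v, -1.0), center, radius)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows


if __name__ == "__main__":
    print("\n".join(render_ascii()))
```

Running the script prints a rough disc of `#` characters: the sphere as seen from the eye. Finding what is visible from one viewpoint is only the first step, and the techniques described next build on the same intersection test.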

Ray Tracing for Computer Graphics

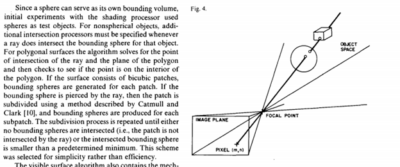

In 1969, more than 400 years after Dürer’s death, IBM’s Arthur Appel showed how the idea of ray tracing could be brought to computer graphics, applying it to computing visibility and shadows.

A decade later, Turner Whitted was the first to show how this idea could capture reflection, shadows and refraction, explaining how the seemingly simple concept could make much more sophisticated computer graphics possible. Progress was rapid in the following few years.

In 1984, Lucasfilm’s Robert Cook, Thomas Porter and Loren Carpenter detailed how ray tracing could incorporate many common filmmaking techniques (including motion blur, depth of field, penumbras, translucency and fuzzy reflections) that were, until then, unattainable in computer graphics.

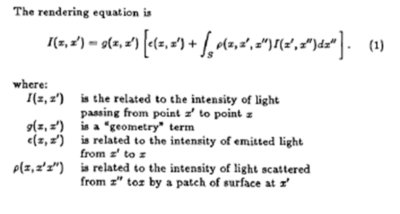

Two years later, Caltech professor Jim Kajiya’s crisp, seven-page paper, “The Rendering Equation,” connected computer graphics with physics by way of ray tracing and introduced the path-tracing algorithm, which makes it possible to accurately represent the way light scatters throughout a scene.

What’s Path Tracing?

In developing path tracing, Kajiya turned to an unlikely inspiration: the study of radiative heat transfer, or how heat spreads throughout an environment. Ideas from that field led him to introduce the rendering equation, which describes how light passes through the air and scatters from surfaces.
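For reference, the equation is often written today in the hemispherical form below. This is the form found in many textbooks, not necessarily the notation of Kajiya’s paper, and the symbol names are assumptions chosen for this sketch:

$$
L_o(x, \omega_o) = L_e(x, \omega_o) + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, (\omega_i \cdot n)\, d\omega_i
$$

Here $L_o$ is the light leaving surface point $x$ in direction $\omega_o$, $L_e$ is the light the surface emits on its own, $L_i$ is the light arriving from direction $\omega_i$, $f_r$ describes how the surface scatters light from $\omega_i$ toward $\omega_o$, $n$ is the surface normal, and the integral runs over the hemisphere $\Omega$ of incoming directions above the surface. Because $L_i$ at one point is the $L_o$ of some other point in the scene, the equation is recursive.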

The rendering equation is concise, but not easy to solve. Computer graphics scenes are complex, with billions of triangles not being unusual today. There’s no way to solve the rendering equation directly, which led to Kajiya’s second crucial innovation.

Kajiya showed that statistical techniques could be used to solve the rendering equation: even if it isn’t solved directly, it’s possible to solve it along the paths of individual rays. If it is solved along the path of enough rays to approximate the lighting in the scene accurately, photorealistic images are possible.

And how is the rendering equation solved along the path of a ray? Ray tracing.

The statistical techniques Kajiya applied are known as Monte Carlo integration and date to the earliest days of computers in the 1940s. Developing improved Monte Carlo algorithms for path tracing remains an open research problem to this day; NVIDIA researchers are at the forefront of this area, regularly publishing new techniques that improve the efficiency of path tracing.
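To make the idea of Monte Carlo integration concrete, here is a small sketch under stated assumptions. It estimates the integral of cos θ over the hemisphere of directions above a surface, a simple stand-in for the lighting integral whose exact value is π. The function name `estimate_cosine_integral` and the choice of uniform hemisphere sampling are illustrative, not taken from Kajiya’s paper.

```python
import math
import random


def estimate_cosine_integral(num_samples, seed=0):
    """Monte Carlo estimate of the integral of cos(theta) over the hemisphere.

    Directions are sampled uniformly over the hemisphere, so every direction
    has probability density 1 / (2 * pi). The exact answer is pi.
    """
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)
    total = 0.0
    for _ in range(num_samples):
        # For uniform hemisphere sampling, cos(theta) is uniform on [0, 1).
        cos_theta = rng.random()
        # Each sample contributes integrand / probability density.
        total += cos_theta / pdf
    return total / num_samples


if __name__ == "__main__":
    for n in (10, 1_000, 100_000):
        estimate = estimate_cosine_integral(n)
        print(f"{n:>7} samples: {estimate:.4f} (error {abs(estimate - math.pi):.4f})")
```

The estimate is noisy with few samples and settles toward π as the count grows. The same trade-off between sample count and noise shows up in path-traced images, which is why more efficient sampling is such an active research topic.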

By putting these two ideas together (a physics-based equation for describing the way light moves around a scene, and the use of Monte Carlo simulation to help choose a manageable number of paths back to a light source), Kajiya outlined the fundamental techniques that would become the standard for generating photorealistic computer-generated images.

His approach transformed a field dominated by a variety of disparate rendering techniques into one that, because it mirrored the physics of the way light moves through the real world, could put simple, powerful algorithms to work to reproduce a large number of visual effects with stunning levels of realism.

Path Tracing Comes to the Movies

In the years after its introduction in 1986, path tracing was seen as an elegant technique, the most accurate approach known, but it was completely impractical. The images in Kajiya’s original paper were just 256 by 256 pixels, yet they took over 7 hours to render on an expensive mini-computer that was far more powerful than the computers available to most other people.

But as computing power grew in line with Moore’s law, the observation that advances in chipmaking allowed manufacturers to double the number of transistors on microprocessors roughly every 18 months, the technique became more and more practical.
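A quick back-of-the-envelope calculation puts those numbers in perspective. The helper names below are made up for this sketch, and the 20-year window is an assumption, chosen as roughly the gap between Kajiya’s paper and the first fully path-traced film. Transistor counts are also only a loose proxy for rendering speed.

```python
def seconds_per_pixel(width, height, hours):
    """Average render time per pixel for an image that took `hours` to finish."""
    return hours * 3600.0 / (width * height)


def moores_law_growth(years, doubling_period_years=1.5):
    """Growth factor if transistor counts double every `doubling_period_years`."""
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    # 256 x 256 pixels in 7 hours (the article says "over 7 hours").
    print(f"{seconds_per_pixel(256, 256, 7):.2f} seconds per pixel")
    # Assumed 20-year window with a doubling every 18 months.
    print(f"about {moores_law_growth(20):,.0f}x more transistors")
```

At that pace, each pixel of the original images cost more than a third of a second of expensive machine time, while two decades of doubling works out to roughly four orders of magnitude more transistors.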

Beginning with movies such as 1998’s A Bug’s Life, ray tracing was used to enhance the computer-generated imagery in more and more motion pictures. And in 2006, the first entirely path-traced movie, Monster House, stunned audiences. It was rendered using the Arnold software that was co-developed at Solid Angle SL (since acquired by Autodesk) and Sony Pictures Imageworks.

The film was a hit, grossing more than $140 million worldwide. And it opened eyes about what a new generation of computer animation could do. As more computing power became available, more movies came to rely on the technique, producing images that are often indistinguishable from those captured by a camera.

The problem: it still takes hours to render a single image, and sprawling collections of servers, known as “render farms,” run continuously for months to render the images for a complete movie. Bringing that to real-time graphics would take an extraordinary leap.

What Does This Look Like in Gaming?

For many years, the idea of path tracing in games was impossible to imagine. While many game developers would have agreed that they would want to use path tracing if it had the performance necessary for real-time graphics, the performance was so far off of real time that path tracing seemed unattainable.

Yet as GPUs have continued to become faster and faster, and now with the widespread availability of RTX hardware, real-time path tracing is in sight. Just as movies began incorporating some ray-tracing techniques before shifting to path tracing, games have started by putting ray tracing to work in a limited way.

Right now a growing number of games are partially ray traced. They combine traditional rasterization-based rendering techniques with some ray-tracing effects.

So what does path traced mean in this context? It could mean a mix of techniques. Game developers could rasterize the primary ray, and then path trace the lighting for the scene.

Rasterization is equivalent to casting one set of rays from a single point, each stopping at the first thing it hits. Ray tracing takes this further, casting rays from many points in any direction. Path tracing simulates the true physics of light, using ray tracing as one component of a larger light simulation system.

This would mean all lights in a scene are sampled stochastically — using Monte Carlo or other techniques — both for direct illumination, to light objects or characters, and for global illumination, to light rooms or environments with indirect lighting.

To do that, rather than tracing a ray back through one bounce, rays would be traced over multiple bounces, presumably back to their light source, just as Kajiya outlined.
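The multi-bounce idea can be sketched as a toy path tracer. Everything specific here is an assumption made for illustration: the scene (a diffuse sphere resting on a diffuse ground plane under a uniform sky that acts as the only light), the albedo values, the bounce limit and all function names. It is a minimal sketch of the general technique, not how any particular game or renderer implements it.

```python
import math
import random

SKY_RADIANCE = 1.0    # assumed: a uniform sky is the only light source
GROUND_ALBEDO = 0.5   # assumed: diffuse ground plane at y = 0
SPHERE_CENTER, SPHERE_RADIUS, SPHERE_ALBEDO = (0.0, 1.0, 0.0), 1.0, 0.6


def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def intersect(origin, direction):
    """Nearest hit as (distance, normal, albedo), or None if the ray reaches the sky.

    `direction` must be unit length.
    """
    best = None
    if direction[1] < -1e-9:  # heading downward, so it may hit the ground
        t = -origin[1] / direction[1]
        if t > 0.0:
            best = (t, (0.0, 1.0, 0.0), GROUND_ALBEDO)
    oc = sub(origin, SPHERE_CENTER)
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - SPHERE_RADIUS ** 2)
    if disc >= 0.0:
        root = math.sqrt(disc)
        for t in (-b - root, -b + root):
            if t > 1e-4:  # first sphere root in front of the origin
                if best is None or t < best[0]:
                    point = add(origin, scale(direction, t))
                    best = (t, normalize(sub(point, SPHERE_CENTER)), SPHERE_ALBEDO)
                break
    return best


def cosine_sample(normal, rng):
    """Cosine-weighted random direction: the normal plus a random unit vector."""
    z = 1.0 - 2.0 * rng.random()
    phi = 2.0 * math.pi * rng.random()
    r = math.sqrt(max(0.0, 1.0 - z * z))
    candidate = add(normal, (r * math.cos(phi), r * math.sin(phi), z))
    if dot(candidate, candidate) < 1e-12:  # random vector cancelled the normal
        return normal
    return normalize(candidate)


def trace_path(origin, direction, rng, max_bounces=8):
    """Follow one path from the eye, bounce by bounce, until it reaches the light."""
    throughput = 1.0
    for _ in range(max_bounces + 1):
        hit = intersect(origin, direction)
        if hit is None:
            return throughput * SKY_RADIANCE  # the path reached the sky light
        distance, normal, albedo = hit
        origin = add(origin, scale(direction, distance))
        direction = cosine_sample(normal, rng)
        # For a diffuse surface with cosine-weighted sampling, each bounce
        # simply scales the carried light by the surface albedo.
        throughput *= albedo
    return 0.0  # path cut off at the bounce limit (a small, deliberate bias)


def estimate_radiance(origin, direction, samples=1000, seed=0):
    """Average many random paths that start with the same camera ray."""
    rng = random.Random(seed)
    return sum(trace_path(origin, direction, rng) for _ in range(samples)) / samples


if __name__ == "__main__":
    down = (0.0, -1.0, 0.0)
    print("open ground:    ", estimate_radiance((50.0, 1.0, 0.0), down))
    print("next to sphere: ", estimate_radiance((1.2, 3.0, 0.0), down))
```

In this toy scene, ground far from the sphere comes out at about half the sky’s brightness, matching its albedo, while ground next to the sphere is darker because many paths strike the sphere before they find the sky. That soft darkening falls out of the simulation without any special-case shadow code.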

A few games are doing this already, and the results are stunning.

Microsoft has released a plugin that puts path tracing to work in Minecraft.

Quake II, the classic shooter — often a sandbox for advanced graphics techniques — can also be fully path traced, thanks to a new plugin.

There’s clearly more to be done. And game developers will need to know customers have the computing power they need to experience path-traced gaming.

Gaming is the most challenging visual computing project of all, requiring both high visual quality and the speed to keep up with fast-twitch gamers.

Expect techniques pioneered here to spill out to every aspect of our digital lives.

What’s Next?

As GPUs continue to grow more powerful, putting path tracing to work is the next logical step.

For example, armed with tools such as Arnold from Autodesk, V-Ray from Chaos Group or Pixar’s RenderMan, along with powerful GPUs, product designers and architects use ray tracing to generate photorealistic mockups of their products in seconds, letting them collaborate better and skip expensive prototyping.

As GPUs offer ever more computing power, video games are the next frontier for ray tracing and path tracing.

In 2018, NVIDIA announced NVIDIA RTX, a ray-tracing technology that brings real-time, movie-quality rendering to game developers.

NVIDIA RTX, which includes a ray-tracing engine running on NVIDIA Ampere and Turing architecture GPUs, supports ray tracing through a variety of interfaces.

And NVIDIA has partnered with Microsoft to enable full RTX support via Microsoft’s new DirectX Raytracing (DXR) API.

Since then, NVIDIA has continued to develop NVIDIA RTX technology, as more and more developers create games that support real-time ray tracing.

Minecraft even includes support for real-time path tracing, turning its blocky world into immersive landscapes swathed with light and shadow.

Thanks to increasingly powerful hardware, and a proliferation of software tools and related technologies, more is coming.

As a result, digital experiences (games, virtual worlds and even online collaboration tools) will take on the cinematic qualities of a Hollywood blockbuster.

So don’t get too comfy. What you’re seeing from your living room couch is just a demo of what’s to come in the world all around us.